Last week, I wrote about Project Sonar as an excellent source for reconnaissance tasks. In this post, I want to generalize and talk about a framework for discovering assets of some particular entity (enterprise, university, ...). When is it useful?

- Penetration testing: You get a loose scope for your assessment. Your first goal should be to find out which machines and services your target exposes.

- Bug bounty hunting: The same as above. Some bug bounty programs don't explicitly list all targets (usually domains), so you often need to find them yourself.

- Regular "hygiene": Sometimes, companies leave services and applications exposed to the Internet. These services are often not updated, might contain bugs with public exploits, or were never meant to be exposed publicly. That company may be yours.

Domains

Put simply, a domain name is a label for IP addresses on the Internet. Because companies move their infrastructure to the cloud, we need to "find a needle in a haystack" or, in our context, "find a company's servers in a cloud provider's IP address pool". Domains therefore provide a link to IP addresses.

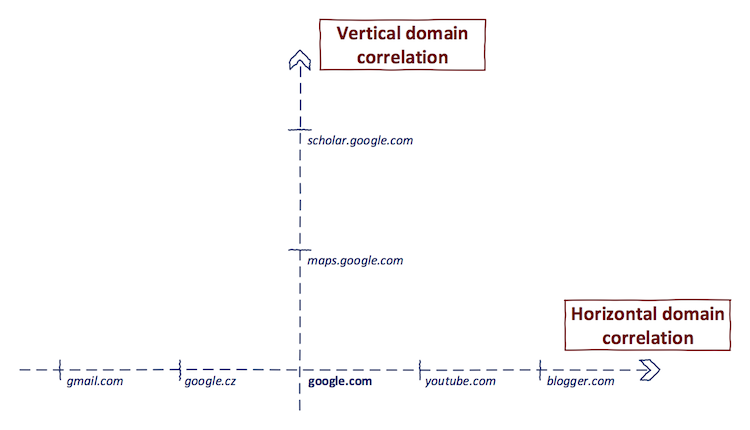

Our goal is to find and correlate all domain names owned by a single entity of interest. We will achieve this step by step with vertical and horizontal domain correlation. In the following text, the word "target" denotes the entity of interest in the correlation process.

- Vertical domain correlation: Given a domain name, vertical domain correlation is the process of finding domains that share the same base domain. This process is also called subdomain enumeration [1].

- Horizontal domain correlation: Given a domain name, horizontal domain correlation is the process of finding other domain names that have a different second-level domain name but are related to the same entity [1].

For demonstration, I've selected eff.org as the target.

Step 1: Perform vertical correlation on eff.org

This can be achieved using tools such as Sublist3r, amass, or aquatone. Note that there are many redundant open-source tools for subdomain enumeration that provide poor results. From my experience, it is best to use "meta-subdomain enumeration" which combines results from multiple enumeration services (such as tools mentioned above).

Sample (stripped) output from Sublist3r:

...

observatory.eff.org

observatory6.eff.org

observatory7.eff.org

office.eff.org

outage.eff.org

owncloud.eff.org

panopticlick.eff.org

projects.eff.org

push.eff.org

redmine.eff.org

robin.eff.org

s.eff.org

...
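To illustrate the "meta" idea, here is a minimal sketch of how I would merge the output of several tools into one clean list. The function name and the sample lists are my own illustration (not real tool output); the only assumption is that each tool gives you one hostname per entry.

```python
def merge_subdomains(base_domain, *result_sets):
    """Merge hostnames from several tools, keeping only subdomains of base_domain."""
    base = base_domain.strip().lower().rstrip(".")
    suffix = "." + base
    merged = set()
    for results in result_sets:
        for raw in results:
            # Normalize case and drop the trailing dot of fully qualified names.
            name = raw.strip().lower().rstrip(".")
            # Some sources emit wildcard entries such as "*.push.eff.org".
            if name.startswith("*."):
                name = name[2:]
            # Keep the base domain itself and anything ending in ".<base>".
            if name == base or name.endswith(suffix):
                merged.add(name)
    return sorted(merged)


if __name__ == "__main__":
    # Hypothetical, hand-written samples standing in for two tools' output.
    tool_a = ["observatory.eff.org", "Office.eff.org", "s.eff.org"]
    tool_b = ["office.eff.org.", "*.push.eff.org", "noteff.org"]
    for host in merge_subdomains("eff.org", tool_a, tool_b):
        print(host)
```

Matching on the suffix ".eff.org" rather than "eff.org" keeps look-alike names such as noteff.org out of the list.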

Step 2: Perform horizontal correlation on eff.org

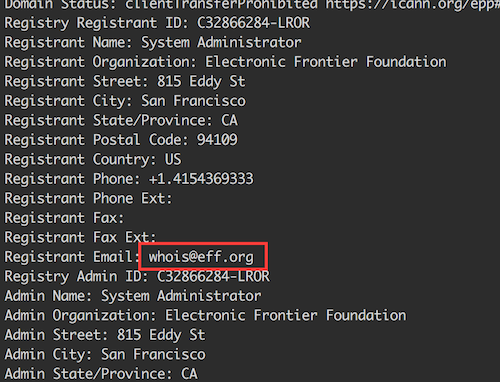

This step is a little trickier. We cannot rely on a syntactic match as we did in the previous step: abcabcabc.com and cbacbacba.com can be owned by the same entity even though the names have nothing in common. For this purpose, we can use WHOIS data. Some reverse WHOIS services let you search for domains that share a value in their WHOIS records. Let's run WHOIS for eff.org:

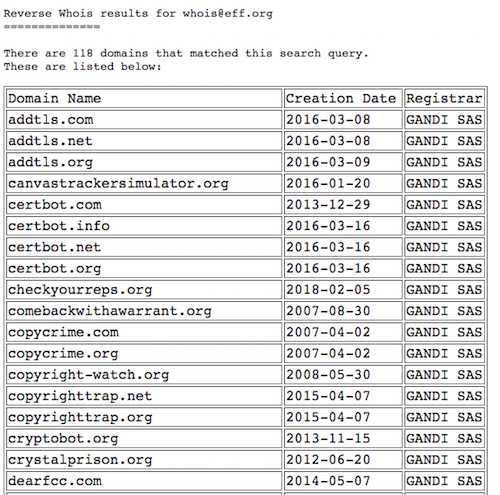

As you can see, an e-mail address is listed as the Registrant contact. A reverse WHOIS search on that address reveals other domains registered with the same Registrant e-mail.

For the reverse WHOIS, I used the viewdns.info service. Tools such as domlink or amass can be used for horizontal domain correlation as well.
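If you already have raw WHOIS records for a set of candidate domains, the same correlation can be sketched offline. This is only an illustration: it assumes each record contains a "Registrant Email:" line, which is not guaranteed (formats differ between registries, and many records are redacted). The function names and the example.* records are hypothetical.

```python
import re
from collections import defaultdict

# Assumption: the record carries a line such as "Registrant Email: a@b.c".
REGISTRANT_EMAIL = re.compile(
    r"^[ \t]*Registrant[ \t]+E-?mail:[ \t]*(\S+@\S+)",
    re.IGNORECASE | re.MULTILINE,
)


def registrant_emails(whois_text):
    """Return the set of registrant e-mail addresses found in one WHOIS record."""
    return {match.lower() for match in REGISTRANT_EMAIL.findall(whois_text)}


def group_by_registrant(whois_records):
    """Map each registrant e-mail to the domains whose record contains it.

    whois_records: dict of domain name -> raw WHOIS text.
    """
    groups = defaultdict(set)
    for domain, text in whois_records.items():
        for email in registrant_emails(text):
            groups[email].add(domain.lower())
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical records, not real WHOIS data.
    records = {
        "example.com": "Domain Name: EXAMPLE.COM\nRegistrant Email: hostmaster@example.com\n",
        "example.net": "Registrant Email: hostmaster@example.com\n",
        "example.org": "Registrant Email: REDACTED FOR PRIVACY\n",
    }
    for email, domains in group_by_registrant(records).items():
        print(email, sorted(domains))
```

Redacted records simply produce no match, so they drop out of the grouping instead of linking unrelated domains together.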

Step 3: Iterate

Identify the most interesting domains from step 2 and run a vertical correlation on each of them.
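The three steps can be sketched as a small loop. This is a rough outline, not a finished tool: `vertical`, `horizontal`, and `select` are placeholders you would wire to your enumeration tool, your reverse WHOIS source, and your own triage of "interesting" domains. All names here are my own.

```python
def correlate(seed, vertical, horizontal, select=lambda domain: True, max_rounds=2):
    """Alternate vertical and horizontal correlation, starting from one seed domain.

    vertical(domain)   -> iterable of subdomains of that domain
    horizontal(domain) -> iterable of related base domains
    select(domain)     -> True if a newly found base domain is worth following
    """
    base_domains = {seed}
    hostnames = set()
    frontier = [seed]
    for _ in range(max_rounds):
        next_frontier = []
        for domain in frontier:
            # Steps 1 and 3: subdomain enumeration on every domain we follow.
            hostnames.update(vertical(domain))
            # Step 2: look for other base domains of the same entity.
            for related in horizontal(domain):
                if related not in base_domains and select(related):
                    base_domains.add(related)
                    next_frontier.append(related)
        if not next_frontier:
            break
        frontier = next_frontier
    return base_domains, hostnames


if __name__ == "__main__":
    # Hypothetical lookup tables standing in for real tools.
    subdomains = {"a.example": ["www.a.example"], "b.example": ["mail.b.example"]}
    related = {"a.example": ["b.example"]}
    bases, hosts = correlate(
        "a.example",
        vertical=lambda d: subdomains.get(d, []),
        horizontal=lambda d: related.get(d, []),
    )
    print(sorted(bases), sorted(hosts))
```

The `max_rounds` cap is there because horizontal correlation can drift quickly, for example when a registrar's or reseller's address shows up as the registrant contact.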

At this stage, you should have a pretty extensive list of domain names linked to your target.

IP Addresses

If you are lucky, your target will have a dedicated IP address range. The easiest way to check is to run IP to ASN translation on three IP addresses found from domain names:

eff.org:

$ dig a eff.org +short

69.50.232.54

$ whois -h whois.cymru.com 69.50.232.54

AS | IP | AS Name

13332 | 69.50.232.54 | HYPEENT-SJ - Hype Enterprises, US

certbot.eff.org:

$ dig a certbot.eff.org +short

lb5.eff.org.

173.239.79.196

$ whois -h whois.cymru.com 173.239.79.196

AS | IP | AS Name

32354 | 173.239.79.196 | UNWIRED - Unwired, US

falcon.eff.org:

$ dig a falcon.eff.org +short

mail2.eff.org.

173.239.79.204

$ whois -h whois.cymru.com 173.239.79.204

AS | IP | AS Name

32354 | 173.239.79.204 | UNWIRED - Unwired, US

In this case, it seems that eff.org doesn't have dedicated IP space. Two of the three hosts sit in the UNWIRED AS, but that AS most likely serves other customers as well. As a counterexample, let's look at Google:

$ dig a google.com +short

216.58.210.14

$ whois -h whois.cymru.com 216.58.210.14

AS | IP | AS Name

15169 | 216.58.210.14 | GOOGLE - Google LLC, US

As you can see, Google operates AS15169 (one of its autonomous systems).
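When you check more than a handful of addresses, it helps to parse the lookups and group them by AS. The sketch below only assumes the pipe-separated "AS | IP | AS Name" layout shown above; the function names are mine. Whether an AS is really dedicated to the target is still a judgment call you make by reading the AS name.

```python
from collections import defaultdict


def parse_cymru(output):
    """Parse 'AS | IP | AS Name' rows into (asn, ip, as_name) tuples."""
    rows = []
    for line in output.splitlines():
        parts = [part.strip() for part in line.split("|", 2)]
        # Skip the header row, blank lines and rows without a numeric ASN.
        if len(parts) != 3 or not parts[0].isdigit():
            continue
        rows.append((int(parts[0]), parts[1], parts[2]))
    return rows


def ips_by_asn(rows):
    """Group IP addresses by (asn, as_name)."""
    grouped = defaultdict(list)
    for asn, ip, as_name in rows:
        grouped[(asn, as_name)].append(ip)
    return dict(grouped)


if __name__ == "__main__":
    # The three eff.org lookups from above, pasted together.
    sample = """AS | IP | AS Name
13332 | 69.50.232.54 | HYPEENT-SJ - Hype Enterprises, US
32354 | 173.239.79.196 | UNWIRED - Unwired, US
32354 | 173.239.79.204 | UNWIRED - Unwired, US
"""
    for (asn, as_name), ips in ips_by_asn(parse_cymru(sample)).items():
        print(f"AS{asn} ({as_name}): {', '.join(ips)}")
```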

Having a dedicated IP range makes things easier: We know that the company owns IP ranges listed in the AS. Using this information, we can compile a list of IP addresses from CIDR notation.
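Expanding CIDR blocks into individual addresses takes a few lines with Python's standard `ipaddress` module. This sketch assumes you have already collected the prefixes announced by the AS from some source, and that they are all IPv4. The function name is mine.

```python
import ipaddress


def expand_cidrs(cidrs):
    """Yield every address in the given CIDR blocks as a string, without duplicates.

    Assumes all blocks are of the same IP version (IPv4 here).
    """
    networks = [ipaddress.ip_network(cidr, strict=False) for cidr in cidrs]
    # collapse_addresses merges overlapping and adjacent blocks first,
    # so an address covered by two prefixes is only produced once.
    for network in ipaddress.collapse_addresses(networks):
        for address in network:
            yield str(address)


if __name__ == "__main__":
    # 192.0.2.0/30 is a documentation range used purely as an example.
    for ip in expand_cidrs(["192.0.2.0/30"]):
        print(ip)
```

A generator keeps memory use flat, which matters once you expand something like a /16.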

If the target doesn't have dedicated space, we will need to rely on domain names compiled in the previous step. From these, we will resolve IP addresses. Even if the target has a dedicated IP range, I recommend following the process below. There is a good chance that part of its infrastructure is already running in the cloud.

Note that there is a high chance of false positives with this approach. Your target might use shared hosting, e.g., for a landing page. The IP address of that host will end up in your list even though it is not dedicated to your target.

For DNS resolution in this context, I recommend massdns. It will resolve the domain names in the compiled list to the IP addresses from their corresponding A records:

./massdns -r lists/resolvers.txt -t A -q -o S domains.txt | awk '{split($0,a," "); print a[3]}' | sort | uniq

This will produce a list of IP addresses corresponding to the target's FQDNs. You can then append this result set to IP addresses from CIDR blocks (if any).
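If you prefer to post-process the massdns output in Python, the sketch below does the same extraction, but keeps only A records (so CNAME targets don't end up in the IP list) and then separates addresses inside your known CIDR blocks from those that need a manual look because of the shared-hosting problem mentioned above. It assumes the simple "name. TYPE value" line format produced by `-o S`; the function names and the /24 in the example are hypothetical.

```python
import ipaddress


def ips_from_massdns(lines):
    """Collect unique IPv4 addresses from 'name. TYPE value' lines."""
    ips = set()
    for line in lines:
        fields = line.split()
        # Only A records carry an IPv4 address in the third field.
        if len(fields) != 3 or fields[1] != "A":
            continue
        try:
            ips.add(ipaddress.IPv4Address(fields[2]))
        except ipaddress.AddressValueError:
            continue
    return [str(ip) for ip in sorted(ips)]


def split_by_ownership(ips, dedicated_cidrs):
    """Split IPs into (inside known target ranges, needs manual review)."""
    networks = [ipaddress.ip_network(cidr, strict=False) for cidr in dedicated_cidrs]
    dedicated, review = [], []
    for ip in ips:
        address = ipaddress.ip_address(ip)
        if any(address in network for network in networks):
            dedicated.append(ip)
        else:
            review.append(ip)
    return dedicated, review


if __name__ == "__main__":
    # Lines written by hand in massdns style from the dig results above.
    output = [
        "eff.org. A 69.50.232.54",
        "certbot.eff.org. CNAME lb5.eff.org.",
        "lb5.eff.org. A 173.239.79.196",
    ]
    ips = ips_from_massdns(output)
    # Hypothetical range, used only to show the split.
    print(split_by_ownership(ips, ["173.239.79.0/24"]))
```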

At this stage, you should have a list of IP addresses (hopefully) linked to your target.

Services

Now to the most interesting part. The reason for collecting domain names and IP addresses is to reveal which services (applications) the target exposes to the Internet. For this purpose, we need to scan the hosts.

We have two options:

- Active scanning: The traditional nmap approach. For a large list of hosts, I also recommend masscan. Active scanning is more time-consuming and can trigger a public-facing IDS. However, you get the most accurate picture of open services.

- Passive scanning: Relies on data gathered by a third party, for instance Shodan or Censys. The downside is that the results might be several days old, so some services may already be closed. On the other hand, you are not connecting to the target network directly. This "stealth" mode is usually preferred for APT simulations. You need to find a balance between freshness and aggressiveness.
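To make the "active" option concrete, this is roughly what a scanner does at its simplest: try to complete a TCP connection and see whether it succeeds. It is a toy sketch using only the standard library, nowhere near a replacement for nmap or masscan (no SYN scanning, no service detection, no parallelism). The function name is mine, and the example only touches localhost.

```python
import socket


def open_tcp_ports(host, ports, timeout=1.0):
    """Return the ports on host that accept a full TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an error code otherwise,
            # instead of raising for refused or timed-out connections.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


if __name__ == "__main__":
    print(open_tcp_ports("127.0.0.1", [22, 80, 443]))
```

Every probe here is a connection the target can see and log, which is exactly the trade-off against passive sources.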

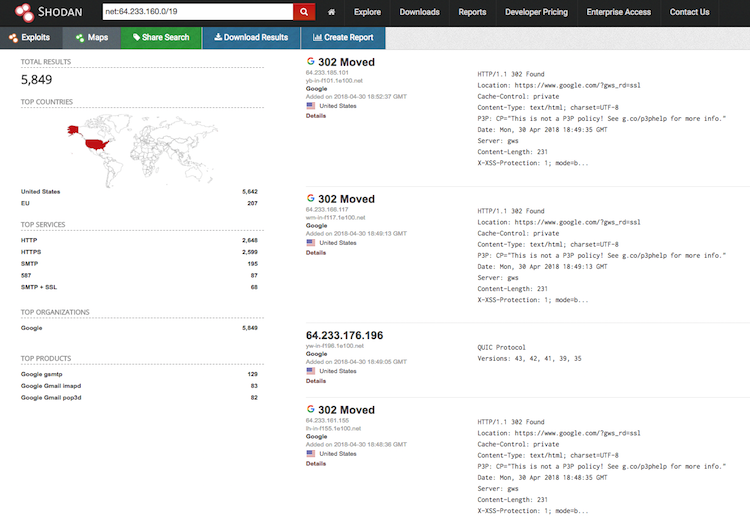

Shodan offers pretty neat dorks for this purpose. We can search specified IP range like this:

net:64.233.160.0/19

Even better, we can filter based on the organization in the WHOIS database:

org:"Google"

Note that this search will also include "Google Cloud", "Google Fiber", etc. which are not part of AS15169. I haven't managed to filter only on "Google".

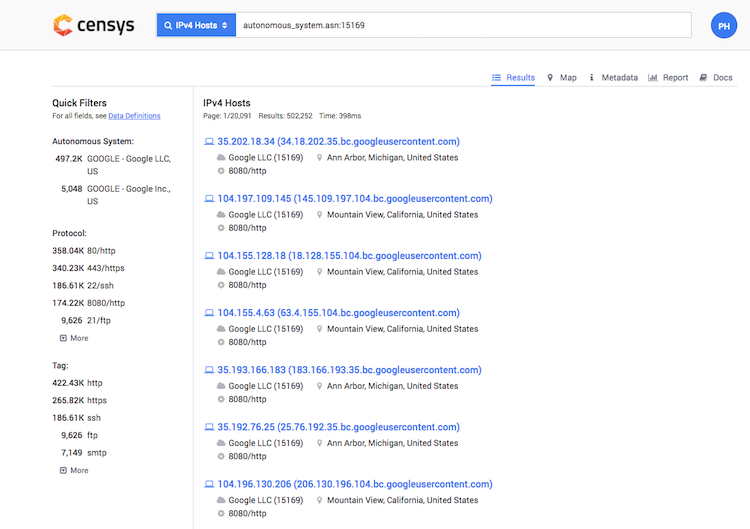

Censys offers pretty much the same functionality:

ip:64.233.160.0/19

And for organization/ASN filter:

autonomous_system.asn:15169

or

autonomous_system.organization:"Google Inc."
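If you have more than a couple of ranges, you can generate these filters instead of typing them. The sketch below only builds query strings using the exact filter names shown above (they may have changed on either service since); it does not call any API, and the function name and parameters are my own.

```python
import ipaddress


def build_queries(cidrs=(), asn=None, shodan_org=None, censys_org=None):
    """Build Shodan and Censys search strings from ranges, an ASN and org names."""
    shodan, censys = [], []
    for cidr in cidrs:
        # Validates the range and normalizes it to its network address.
        network = ipaddress.ip_network(cidr, strict=False)
        shodan.append(f"net:{network}")
        censys.append(f"ip:{network}")
    if shodan_org:
        shodan.append(f'org:"{shodan_org}"')
    if censys_org:
        censys.append(f'autonomous_system.organization:"{censys_org}"')
    if asn is not None:
        censys.append(f"autonomous_system.asn:{int(asn)}")
    return {"shodan": shodan, "censys": censys}


if __name__ == "__main__":
    queries = build_queries(["64.233.160.0/19"], asn=15169, shodan_org="Google")
    for service, items in queries.items():
        for query in items:
            print(f"{service}: {query}")
```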

For a full tutorial on Censys, check out my other post.

For the full stealth mode, you can use Project Sonar to retrieve everything from domains to open ports.

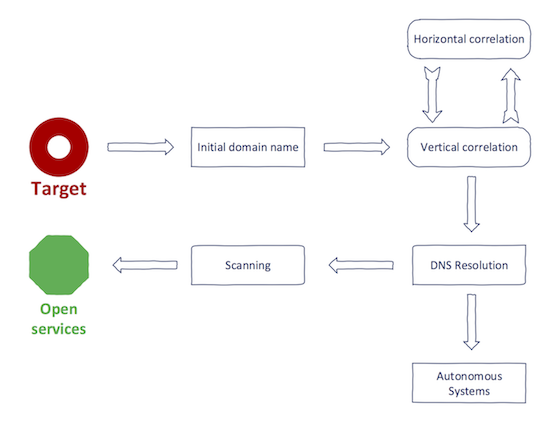

Putting It Together

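At this point, you should have very good visibility into your target. The process went from domains (vertical and horizontal correlation), through IP addresses (AS ranges plus DNS resolution), to services (active or passive scanning).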

The final set should contain (IP, port) tuples that belong to the target and are open.
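Building that final set is a simple filter once you normalize whatever your scanner or passive source returned. The sketch below assumes you can reduce each observation to an (ip, port, state) triple; that shape, the function name, and the sample data (documentation IP ranges, not real results) are all my own illustration.

```python
import ipaddress


def exposed_services(target_ips, observations):
    """Return sorted, de-duplicated (ip, port) tuples for open ports on target IPs.

    observations: iterable of (ip, port, state) triples from any scan source.
    """
    targets = set(target_ips)
    result = {
        (ip, int(port))
        for ip, port, state in observations
        if ip in targets and state == "open"
    }

    def sort_key(item):
        address = ipaddress.ip_address(item[0])
        # Sort numerically by address (IPv4 before IPv6), then by port.
        return (address.version, int(address), item[1])

    return sorted(result, key=sort_key)


if __name__ == "__main__":
    # Made-up observations using documentation address ranges.
    observations = [
        ("198.51.100.7", 443, "open"),
        ("192.0.2.10", 80, "open"),
        ("192.0.2.10", 22, "closed"),
        ("203.0.113.9", 80, "open"),  # not in the target list, dropped
    ]
    for ip, port in exposed_services(["192.0.2.10", "198.51.100.7"], observations):
        print(ip, port)
```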

You can still perform post-processing tasks to reveal the most interesting services quickly. For instance, you might run a mass website screenshot tool such as Snapper, which gives an excellent overview of running websites in a single place. Similar results can be achieved for VNC or RDP (EyeWitness is a Swiss Army knife for that).


Until next time!